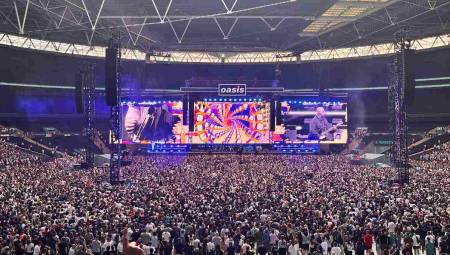

Latin America. AI can already create voices and videos that impersonate humans. Some criminals use it to deceive citizens, posing as companies or institutions to get their money, as shown in this video.

As generative voice models mature, the "flaws" that give away a deepfake (audiovisual content synthesized or manipulated with generative AI models) become more subtle. The key is not to listen for a robot, but to distinguish the algorithmic footprint from a mere bad connection.

This is explained by Josep Curto, professor at the Faculty of Computer Science, Multimedia and Telecommunications at the Open University of Catalonia (UOC), who offers practical signals for detecting synthetic voices in real time, recommends tools and watermarks while noting their limits, and proposes an anti-fraud ABC for companies and public administrations.

"As models are refined, the detection signals become more subtle," warns the expert. Most voice deepfakes give themselves away in how the conversation sounds rather than in the texture of the timbre: in the prosody (intonation, accent, rhythm, intensity), in the pauses and in suspiciously regular latencies.

That's why, in everyday environments (an urgent call, a supposed bank notice, an impromptu video call), many people don't notice anomalies if they don't know what to look for and how to check it in the moment.

Curto underscores a golden rule: distinguish network artifacts from algorithmic imperfections. On a poor connection, the sound fluctuates and the audio/video lag is erratic; in synthetic content, "glitches" tend to be consistent (flat intonation or improbable pitch jumps, pauses placed where grammar doesn't expect them, response latencies that are "too uniform").

In a video call, the micro-signals of the face – blinking, shadows, detail of hair and ears – give away more than the lip-sync itself (temporal correspondence between lip movement and speech sound): when it is AI, small visual inconsistencies appear that are not explained by compression or bandwidth.

For a live test, the expert recommends breaking the model's inertia: ask the caller to repeat an unexpected phrase, introduce background noise (a clap in front of the microphone, loud typing) or intersperse short interruptions to force variation in prosody.

If unnatural intonation or constant latencies persist, activate the verification protocol: call back on a verified number and check the safe word. "The best defence is human scepticism, more verification through a second channel and less public footprint of your voice", summarises the UOC professor.

Five reliable real-time signals

1. Unnatural prosody and flat intonation

The voice does not flow emotionally: there are uniform or misplaced pauses, monotonous tones or abrupt jumps. On a bad network you hear dropouts or compression, but when the signal returns, the accent and basic intonation sound human.

2. Spectral artifacts ("metallic" sound, clicks at word endings)

Hissing or anomalous brightness at the ends of words; audio that is too clean for the environment. On a poor network, noise and quality fluctuate, while AI artifacts tend to be consistent.

3. Lip-voice mismatch in video call

Constant delay or micro-anomalies (lips that "float" over the teeth). On a bad network there is lag, but facial movement is still organic.

4. Strange micro-gestures

Infrequent blinking, a fixed stare, flattened shadows and lighting, hair or ears with odd pixels. On a bad network you'll see freezes or the blocky pixelation typical of compression, not those fine details.

5. Suspicious latency

Delays that are too regular, or sudden changes for no apparent reason. Models tend to take a fixed amount of time to "spit out" the full response; a poor network causes erratic latencies and "unstable connection" warnings.
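The "too regular" latency signal can be made concrete with a simple statistic. The following is a minimal sketch, assuming someone has noted the delay (in milliseconds) between the end of each question and the start of each answer. The function names and the 0.05 threshold are illustrative assumptions, not calibrated values or part of any tool mentioned here.

```python
from statistics import mean, pstdev


def latency_regularity(latencies_ms):
    """Coefficient of variation (std / mean) of response latencies.

    Values close to 0 mean the delays are almost identical.
    """
    if len(latencies_ms) < 3:
        raise ValueError("need at least three latency samples")
    avg = mean(latencies_ms)
    if avg <= 0:
        raise ValueError("latencies must be positive")
    return pstdev(latencies_ms) / avg


def looks_too_regular(latencies_ms, cv_threshold=0.05):
    # cv_threshold is an illustrative assumption, not a calibrated value.
    return latency_regularity(latencies_ms) < cv_threshold


# Invented example data: near-constant delays vs. erratic ones.
suspicious = [800, 805, 798, 802, 801]
erratic = [300, 1200, 650, 2100, 450]
print(looks_too_regular(suspicious), looks_too_regular(erratic))
```

A low value on its own proves nothing; it is one signal to combine with the others before triggering a callback.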

Detectors and watermarks: useful, but not magical

The detection of synthetic audio is something of an ever-evolving arms race, Curto explains. The tools available focus on forensic artifacts that AI models have not yet learned to remove. Here are the two most promising approaches:

1) Forensic detection (classification models)

These models analyze acoustic traits (spectral artifacts, unnatural prosody, etc.) to recognize patterns characteristic of AI-generated audio.

ASVspoof Challenges: reference datasets (such as the Logical Access, LA, and Physical Access, PA, tracks) and metrics for training and comparing detectors. Error rates rise when the spoofing attack (voice cloning) uses a different model from the ones seen in training.

Media tools (e.g. VerificAudio): used in newsrooms (PRISA Media) with a double layer of AI: synthetic-signal detection plus contextual verification. Accuracy figures are not public and vary by language; there is a risk of false positives with highly compressed or noisy audio.

Platform detectors (e.g. ElevenLabs): reliable on their own audio; they do not generalize well to external generators (Google/Meta).
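Detectors of this kind are commonly compared using the equal error rate (EER), the operating point where the share of genuine speech rejected equals the share of spoofed speech accepted. The sketch below is a simplified approximation over a handful of scores, assuming a higher score means "more likely genuine". All scores are invented for illustration, and `equal_error_rate` is a hypothetical helper, not part of any official toolkit.

```python
def equal_error_rate(bonafide_scores, spoof_scores):
    """Approximate EER by sweeping thresholds over the observed scores."""
    thresholds = sorted(set(bonafide_scores) | set(spoof_scores))
    best_gap, eer = float("inf"), 1.0
    for t in thresholds:
        # False rejection: genuine speech scored below the threshold.
        frr = sum(s < t for s in bonafide_scores) / len(bonafide_scores)
        # False acceptance: spoofed speech scored at or above the threshold.
        far = sum(s >= t for s in spoof_scores) / len(spoof_scores)
        gap = abs(far - frr)
        if gap < best_gap:
            best_gap, eer = gap, (far + frr) / 2
    return eer


genuine = [0.9, 0.6, 0.4, 0.8]
# Invented scores: spoofs from a generator seen in training are well separated,
# spoofs from an unseen generator overlap with genuine speech.
seen_generator = [0.1, 0.2, 0.3, 0.15]
unseen_generator = [0.1, 0.5, 0.7, 0.2]

print(equal_error_rate(genuine, seen_generator))
print(equal_error_rate(genuine, unseen_generator))
```

In this toy data the error rate rises for the unseen generator, which mirrors the generalization problem described above.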

2) Watermarking

Strategy of labeling the generated content at source. Some ways to do this are:

AudioSeal (Meta): an imperceptible watermark that allows localized detection (identifying which parts have been altered). Available for free on GitHub. Vulnerable to MP3 compression, pitch shifting or reverb; false negatives under adversarial post-processing are increasing.

SynthID (Google): a multimodal watermark (it originated in images and has been extended to audio and text). It aims to remain detectable after edits (cropping, compression). Its effectiveness depends on standards (ISO/IEC) and adoption: if the generator does not implement it, it is useless.

Four (proactive) best practices to protect your voice

The best defense is human skepticism (prosody, context, movement), complemented by strong identity verification (key codes) and limiting public voiceprint exposure, Curto explains.

Researchers are also studying how to create distortions that are imperceptible to a human but confuse the AI training algorithms that try to extract the voiceprint. The idea is to "poison" the training dataset without affecting human communication. This is the future of proactive protection.

The following are a series of good practices available to everyone:

Consent and privacy: do not share recordings without a clear purpose; in corporate environments, require consent to record or analyze voice biometrics. Review voice assistants (Alexa/Google) and disable continuous storage and the "help improve the service" option.

MFA (multi-factor authentication) for voice verification: set up an unexpected, rotating and contextual "security code" or "anti-deepfake phrase". Example: "What's Tuesday's word?"

Manage your public voiceprint: limit the publication of long, clear audio recordings on open channels. If they are published, lower the bitrate (the amount of data processed per second, measured in kbps) or add background music.

White noise/jamming technologies: devices (e.g., HARP Speech Protector) or software that use ultrasound or broadband noise to interfere with microphones. Expensive, limited in scope and subject to possible legal restrictions.
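The rotating "word of the day" from the MFA practice above can be derived from a shared secret instead of being circulated by email or chat. This is a minimal sketch, assuming both parties hold the same secret and agree on the date. The word list, the function names and the choice of HMAC-SHA256 are illustrative assumptions, not a scheme proposed by the expert.

```python
import hashlib
import hmac
from datetime import date

# Hypothetical word list; a real one should be much longer to resist guessing.
WORDLIST = ["harbor", "lantern", "meadow", "copper",
            "violin", "glacier", "orchard", "saffron"]


def word_of_the_day(shared_secret: bytes, day: date) -> str:
    """Derive the day's code word from a secret both parties already hold."""
    digest = hmac.new(shared_secret, day.isoformat().encode(), hashlib.sha256).digest()
    index = int.from_bytes(digest[:4], "big") % len(WORDLIST)
    return WORDLIST[index]


def verify_spoken_word(shared_secret: bytes, day: date, spoken: str) -> bool:
    """Compare what the caller said with the expected word."""
    expected = word_of_the_day(shared_secret, day)
    return hmac.compare_digest(expected.encode(), spoken.strip().lower().encode())


secret = b"example-secret-agreed-in-person"  # illustrative value only
today = date(2025, 1, 7)
print(verify_spoken_word(secret, today, word_of_the_day(secret, today)))
```

Because the word changes with the date and never travels over the phone line in advance, a cloned voice alone is not enough to answer the challenge.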

A practical, step-by-step ABC for companies and administrations

When a call comes in with a sensitive request (payments, passwords, urgent changes), the ideal order to check is as follows:

A. Confirm who is speaking

Start with a human, contextual verification using a pre-agreed safe word. The response must be reviewed by a person (a supervisor or, at the very least, a non-automated secondary system) so that a model cannot generate a plausible reply unchecked.

B. If doubt persists, break the script

Apply a cross-callback (out-of-band verification, that is, confirming someone's identity through a different communication channel): end the call matter-of-factly ("The quality is poor, I'll call you back now") and call a verified number from your CRM or files (verified contact records). Never call back the incoming number. If the person answers on the expected channel or number and the context matches, authenticity is very likely. This step defeats many attempts because it forces the fraudster to control the second channel as well.

C. If validation fails, leave a trace and escalate

Activate the internal alert protocol for attempted fraud. Close the conversation with a security phrase ("By protocol we must end this call"), record the time, the apparent origin (even if it is false) and the anomalous signals observed ("flat prosody when answering the key", constant latency, etc.), and immediately escalate it to the cybersecurity or legal department.
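The three steps above can be sketched as a small decision routine that tells an operator what to do next. This is an illustrative sketch only: the `CallCheck` fields, the `Outcome` names and the shape of the incident record are assumptions made for the example, not an existing system.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from enum import Enum
from typing import List, Optional, Tuple


class Outcome(Enum):
    PROCEED = "proceed"
    CALL_BACK = "call back on a verified number"
    ESCALATE = "log and escalate"


@dataclass
class CallCheck:
    apparent_origin: str                 # number or identity shown, even if false
    safe_word_ok: bool                   # step A, as judged by a human reviewer
    callback_ok: Optional[bool] = None   # step B; None means not attempted yet
    anomalies: List[str] = field(default_factory=list)


def triage(check: CallCheck) -> Tuple[Outcome, Optional[dict]]:
    """Return the next action and, on escalation, an incident record."""
    doubt = (not check.safe_word_ok) or bool(check.anomalies)
    if not doubt:
        return Outcome.PROCEED, None       # A passed with no anomalies
    if check.callback_ok is None:
        return Outcome.CALL_BACK, None     # B: break the script
    if check.callback_ok:
        return Outcome.PROCEED, None       # second channel confirmed identity
    incident = {                           # C: leave a trace
        "time_utc": datetime.now(timezone.utc).isoformat(),
        "apparent_origin": check.apparent_origin,
        "anomalies": list(check.anomalies),
    }
    return Outcome.ESCALATE, incident


outcome, record = triage(CallCheck("+00 000 000 000", safe_word_ok=False,
                                   callback_ok=False,
                                   anomalies=["constant latency"]))
print(outcome.value, record["anomalies"])
```

Encoding the order this way makes it harder to skip the callback under pressure, since the routine never returns "proceed" while doubt remains and no second channel has confirmed the caller.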

All of this works only if staff are trained: they need to practice detecting emotional changes and, above all, avoid giving in to urgency or pressure, which are typical tactics for preventing callbacks.